Need for regularization

Machine learning models can overfit: they make accurate predictions on the training data but inaccurate ones on new examples. Regularization helps prevent overfitting by shrinking the parameters through a regularization term added to the cost function.

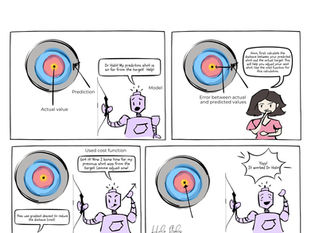

Check out my comic that explains regularization in a fun and simple way!

Regularization calculation

Let's take an example of a linear regression model.

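From my cost function blog, we can define the minimization of the linear regression cost function (in its usual squared-error form) as:

$$\min_{\theta} J(\theta) = \min_{\theta} \frac{1}{2M} \sum_{i=1}^{M} \left( h_\theta(x_i) - y_i \right)^2$$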

Where,

J(θ) - cost function of linear regression

M - number of training examples

hθ(xi) - predicted output for the i-th input x, given the parameters θ

θ - model parameters (the weights)

yi - actual output for the input xi
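To make the symbols above concrete, here is a minimal NumPy sketch of the cost function. The `linear_cost` name, the toy data, and the 1/(2M) scaling are my own assumptions for illustration, not something fixed by the definitions above.

```python
import numpy as np

def linear_cost(theta, X, y):
    """Squared-error cost J(theta), using an assumed 1/(2M) scaling."""
    M = X.shape[0]                 # number of training examples
    predictions = X @ theta        # h_theta(x_i) for every example
    errors = predictions - y       # h_theta(x_i) - y_i
    return float(np.sum(errors ** 2) / (2 * M))

# Toy data (made up): y = 1 + 2x, with a leading column of ones for the intercept
X = np.array([[1.0, 0.0], [1.0, 1.0], [1.0, 2.0]])
y = np.array([1.0, 3.0, 5.0])

perfect_cost = linear_cost(np.array([1.0, 2.0]), X, y)  # parameters that fit exactly
worse_cost = linear_cost(np.array([0.0, 0.0]), X, y)    # all-zero parameters
print(perfect_cost, worse_cost)
```

The parameters that match the data give a cost of zero, and any worse guess gives a larger value, which is what the minimization is searching over.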

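As we can see in the overfitting graph, the model has too many parameters for the data.

To fix this, we add penalty terms to the cost function in which θ3 and θ4 are multiplied by a large number.

Because the whole expression is being minimized, the only way to keep the cost low is to make θ3 and θ4 really small, so they become negligible.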
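Here is a rough sketch of that idea in NumPy. I'm assuming a degree-4 polynomial model, made-up noisy data, squared penalties on θ3 and θ4, and a multiplier of 1000; all of these are illustrative choices rather than values taken from the graph.

```python
import numpy as np

rng = np.random.default_rng(0)
x = np.linspace(-1.0, 1.0, 10)
y = 1.0 + 2.0 * x + x ** 2 + rng.normal(scale=0.1, size=x.size)  # made-up noisy data

# Degree-4 polynomial features: columns are x^0, x^1, x^2, x^3, x^4
X = np.vander(x, N=5, increasing=True)
M = X.shape[0]

# Plain least squares: nothing stops theta_3 and theta_4 from fitting the noise
theta_plain, *_ = np.linalg.lstsq(X, y, rcond=None)

# Penalized fit: minimize (1/2M) * sum(errors^2) + 1000*theta_3^2 + 1000*theta_4^2.
# Setting the gradient to zero gives (X^T X + 2M * P) theta = X^T y.
P = np.diag([0.0, 0.0, 0.0, 1000.0, 1000.0])
theta_penalized = np.linalg.solve(X.T @ X + 2 * M * P, X.T @ y)

print("plain:    ", np.round(theta_plain, 4))
print("penalized:", np.round(theta_penalized, 4))
```

In the penalized fit, θ3 and θ4 are pushed very close to zero, so the model behaves almost like a quadratic even though it still has five parameters.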

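Now, the generalized equation for linear regression regularization can be written as:

$$\min_{\theta} \frac{1}{2M} \left[ \sum_{i=1}^{M} \left( h_\theta(x_i) - y_i \right)^2 + \lambda \sum_{j=1}^{n} \theta_j^2 \right]$$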

Where,

λ - regularization parameter (controls how strongly large parameter values are penalized)

n - number of parameters
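As a small sketch, the regularized cost could be computed like this. The `regularized_cost` name, the squared (L2) penalty, the 1/(2M) scaling, and leaving the intercept θ0 out of the penalty are assumptions on my part; other conventions exist.

```python
import numpy as np

def regularized_cost(theta, X, y, lam):
    """Squared-error cost plus lam * sum(theta_j^2), all scaled by 1/(2M)."""
    M = X.shape[0]
    errors = X @ theta - y
    # Assumption: the intercept theta[0] is left out of the penalty
    penalty = lam * np.sum(theta[1:] ** 2)
    return float((np.sum(errors ** 2) + penalty) / (2 * M))

# Toy data (made up): y = 1 + 2x, with a leading column of ones for the intercept
X = np.array([[1.0, 0.0], [1.0, 1.0], [1.0, 2.0]])
y = np.array([1.0, 3.0, 5.0])
theta = np.array([1.0, 2.0])  # fits the toy data exactly

cost_no_penalty = regularized_cost(theta, X, y, lam=0.0)    # 0.0
cost_with_penalty = regularized_cost(theta, X, y, lam=3.0)  # 2.0
print(cost_no_penalty, cost_with_penalty)
```

Even a perfect fit now pays a price for having a large weight, and a bigger λ makes that price higher, which is what pushes the minimizer toward smaller parameters.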

Similar to the linear regression example we've taken, any cost function can be regularized by adding a penalty term that shrinks the parameters.
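One way to picture this is a tiny wrapper that adds a penalty to whatever cost function you give it. The names `add_l2_penalty` and `toy_cost` are hypothetical, and for simplicity this sketch penalizes every parameter and skips any 1/(2M) scaling.

```python
import numpy as np

def add_l2_penalty(cost_fn, lam):
    """Return a new cost function: cost_fn(theta) + lam * sum(theta_j^2)."""
    def regularized(theta):
        theta = np.asarray(theta, dtype=float)
        return cost_fn(theta) + lam * float(np.sum(theta ** 2))
    return regularized

# Hypothetical unregularized cost, minimized at theta = (3, -2)
def toy_cost(theta):
    theta = np.asarray(theta, dtype=float)
    return float(np.sum((theta - np.array([3.0, -2.0])) ** 2))

penalized_cost = add_l2_penalty(toy_cost, lam=1.0)

at_original_minimum = penalized_cost([3.0, -2.0])  # 0 + 13 = 13.0
at_shrunk_point = penalized_cost([1.5, -1.0])      # 3.25 + 3.25 = 6.5
print(at_original_minimum, at_shrunk_point)
```

With the penalty added, the point halfway toward zero scores better than the original minimum, so the best parameters have shrunk.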

Hope you've understood the concept of regularization! Let me know what you think :)
